Two group members attended the 5th International Conference on System Reliability and Safety Engineering (SRSE 2023) in Beijing, China

Two group members attended the 5th International Conference on System Reliability and Safety Engineering (SRSE 2023), held in Beijing, China, from October 20-23, 2023, in conjunction with the annual meeting of the Institute for Quality and Reliability (IQR), Tsinghua University. The conference was sponsored by Tsinghua University, supported by the National University of Singapore, organized by the Institute for Quality and Reliability, Tsinghua University, and co-organized by the Department of Industrial Engineering, Tsinghua University. Its patrons included Beijing Institute of Technology, Harbin Institute of Technology, Nanjing University of Science and Technology, Qingdao University, Shanghai University, Shanghai Jiao Tong University, Northwestern Polytechnical University, Sun Yat-sen University, City University of Hong Kong, University of Alberta, and others.

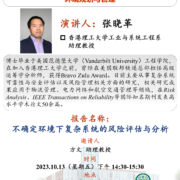

Dr. Xiaoge Zhang delivered a talk on “Safety assessment and risk analysis of complex systems under uncertainty” at Nanjing University, China

This talk presented two different strategies for assessing and analyzing the safety of the air transportation system. First, to exploit the rich information contained in historical aviation accidents, we analyzed the accidents reported by the National Transportation Safety Board (NTSB) over the past two decades and developed a large-scale Bayesian network that models the causal relationships among the many factors contributing to aviation accidents. This Bayesian network greatly facilitates root cause diagnosis and outcome analysis of aviation accidents. Second, we investigated how deep learning can be leveraged to forecast flight trajectories. Using a Bayesian neural network, we fully characterize the effect of exogenous variables on the flight trajectory. The trajectory prediction is then extended to multiple flights, and the predicted trajectories are used to assess safety based on the horizontal and vertical separation distances between pairs of flights, enabling real-time monitoring of in-flight safety.

Dr. Xiaoge Zhang delivered a talk on “A Review on Uncertainty Quantification of Neural Network and Its Application for Reliable Detection of Steel Wire Rope Defects” at Hunan University, China

This talk provided a holistic view of emerging uncertainty quantification (UQ) methods for machine learning (ML) models, with a particular focus on neural networks, and gave a tutorial-style description of several state-of-the-art UQ methods: Gaussian process regression, Bayesian neural networks, neural network ensembles, and deterministic UQ methods, focusing on the spectral-normalized neural Gaussian process (SNGP). Building on their mathematical formulations, we then evaluated the soundness of these UQ methods both quantitatively and qualitatively (using a toy regression example) to reveal their strengths and shortcomings along different dimensions. Based on the findings of this comparison, we exploited the advantages of SNGP in UQ and developed an uncertainty-aware deep neural network to detect defects in steel wire ropes. Computational experiments and comparisons with state-of-the-art models suggest that the principled uncertainty estimates provided by SNGP not only substantially improve prediction performance but also provide an essential layer of protection for the neural network against out-of-distribution data.